Convert old site to static site

Having to maintain old servers serving old sites is horrible. You don’t want to take down an old site that still draws a few visitors, but you also don’t want to keep paying the server costs and spending the maintenance time. I recently switched a couple of these old sites to statically served sites. It’s a relatively simple job and saves a lot of time and effort in the long run.

We’ll look at downloading your old site and serving it through GitHub Pages. As a running example, we’ll move an old project called myproject, hosted on myproject.com, to GitHub Pages.

Download site

The goal is to make a local copy of the remote site. We need a downloader that crawls the site, follows all its links and downloads all the HTML, CSS, JS and images it finds. Good old wget does this task wonderfully:

wget --mirror --adjust-extension --page-requisites --no-parent -U 'Mozilla/5.0 (X11; Linux x86_64; rv:30.0) Gecko/20100101 Firefox/30.0' http://myproject.com

Note that I set Firefox as the user agent (-U). My requests were being denied, and adding the user agent let them through to the server.
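For reuse, the same command can be wrapped in a small shell function with each option commented. This is a minimal sketch: the function name `mirror_site` and the `WGET` override variable are hypothetical additions, not part of the original command. Setting `WGET=echo` prints the command instead of running it, which works as a dry run, and any extra wget options can be passed after the URL.

```shell
# Hypothetical wrapper around the wget mirroring command from this post.
# Usage: mirror_site URL [extra wget options...]
# Set WGET=echo to print the command instead of running it (dry run).
mirror_site() {
  url=$1
  shift
  # --mirror            recursion + timestamping with infinite depth
  # --adjust-extension  append .html/.css to HTML/CSS files whose names lack it
  # --page-requisites   also fetch the CSS, JS and images each page needs
  # --no-parent         never ascend above the starting directory
  # -U                  send a Firefox user agent (requests were denied without it)
  ${WGET:-wget} --mirror --adjust-extension --page-requisites --no-parent \
    -U 'Mozilla/5.0 (X11; Linux x86_64; rv:30.0) Gecko/20100101 Firefox/30.0' \
    "$@" "$url"
}
```

Usage: `mirror_site http://myproject.com`, or `WGET=echo mirror_site http://myproject.com` to inspect the command first.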

Exclude dirs

When mirroring a site you may want to exclude certain paths.

I recently ran into a problem where the login link on every page was treated as a new page, because the current path was appended to the login URL, like: http://myproject.com/accounts/login?next=where/i/came/from.

To exclude all of these pages from the mirror, use the --exclude-directories option:

wget --mirror --exclude-directories=/accounts/login ... http://myproject.com
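After the mirror finishes, you can confirm the exclusion worked by searching the downloaded tree for saved login pages. A minimal sketch, assuming the mirror lives in a directory named myproject.com and the excluded path is accounts/login; `find_unwanted` is a hypothetical helper name.

```shell
# find_unwanted DIR PATTERN: list files under DIR whose path matches PATTERN
# (a find -path glob). Returns non-zero if anything matched, so it can be
# used as a check in scripts.
find_unwanted() {
  matches=$(find "$1" -path "$2" -print)
  if [ -n "$matches" ]; then
    printf '%s\n' "$matches"
    return 1
  fi
  return 0
}
```

Usage: `find_unwanted myproject.com '*/accounts/login*'` prints any login pages that slipped through and fails if there are some.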

Test locally

Run a local HTTP server in the downloaded directory (wget names it myproject.com). For example, use the very simple Node.js http-server by running:

http-server

Now open http://localhost:8080.

Your site should work just like the old one, although dynamic features such as search will no longer work. I ran into a problem with a WordPress site where some URLs were absolute rather than relative. For example, scripts were referenced like:

http://myproject.com/wp-includes/js/jquery/jquery.js

So the browser was fetching that content from the original site instead of the local web server.
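To track down every file that still points at the original site, you can search the mirror for the domain. A minimal sketch, assuming the old domain is myproject.com; `list_absolute_refs` is a hypothetical helper name, and the pattern is an assumption that covers http://, https:// and protocol-relative //myproject.com references.

```shell
# list_absolute_refs DIR DOMAIN: print the names of HTML, CSS and JS files
# under DIR that still contain an absolute reference to DOMAIN.
list_absolute_refs() {
  # Escape dots so "myproject.com" does not also match "myprojectxcom".
  domain_re=$(printf '%s' "$2" | sed 's/\./\\./g')
  find "$1" -type f \( -name '*.html' -o -name '*.css' -o -name '*.js' \) \
    -exec grep -l -E "(https?:)?//$domain_re" {} +
}
```

Usage: `list_absolute_refs myproject.com myproject.com` (the first argument is the mirror directory, the second the domain).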

Path replacement

Having the domain name in a URL is not necessarily a problem. The URLs should resolve correctly once the static site replaces the existing dynamic site. Personally, however, I dislike a hard-coded domain name. To test the site locally you would have to change your DNS resolution by editing your hosts file. Alternatively, you can change the URLs themselves by running the following command:

find . -type f \( -name "*.html" -o -name "*.css" -o -name "*.js" \) -print0 | xargs -0 sed -i 's,http://myproject\.com,,g'

The previous command will change:

http://myproject.com/wp-includes/js/jquery/jquery.js

into

/wp-includes/js/jquery/jquery.js
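The command above only rewrites http:// URLs. If the site might also reference https:// or protocol-relative //myproject.com URLs (an assumption; check your own pages), a slightly more general sketch strips all three forms. `strip_domain` is a hypothetical helper name, and it relies on GNU sed's in-place `-i` (BSD/macOS sed expects `-i ''`).

```shell
# strip_domain DIR DOMAIN: rewrite absolute links to DOMAIN in HTML, CSS and
# JS files under DIR into root-relative paths, in place (GNU sed -i).
# http://DOMAIN/x, https://DOMAIN/x and //DOMAIN/x all become /x.
strip_domain() {
  # Escape dots so the domain is matched literally.
  domain_re=$(printf '%s' "$2" | sed 's/\./\\./g')
  find "$1" -type f \( -name '*.html' -o -name '*.css' -o -name '*.js' \) \
    -exec sed -i -E "s#(https?:)?//$domain_re##g" {} +
}
```

As with the one-liner, a bare link to the domain with no trailing path (for example a home link to http://myproject.com) becomes an empty string, so it is worth checking those links by hand.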

GET parameterized resources

Asset URLs containing GET parameters will probably stop working when served statically, because a static web server ignores the query string when it maps a URL to a file.

In practice, a request for /public/fonts/fontawesome-webfont.woff2?v=4.3.0 (note the ?v=4.3.0) makes the web server look for the file /public/fonts/fontawesome-webfont.woff2. wget, however, saved the resource under a file name that includes ?v=4.3.0, so no such file exists and the browser fails to load the resource.

The simplest solution is to make a copy of the original file without the parameter, e.g.:

cp "public/fonts/fontawesome-webfont.woff2?v=4.3.0" "public/fonts/fontawesome-webfont.woff2"
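When there are many such files, the copy can be automated. A minimal sketch, assuming wget saved the files with the query string as part of the file name, as in the fontawesome example: it copies every file whose name contains ? to the same name with everything from the ? onward removed, and skips targets that already exist so it never overwrites a real file. `copy_without_query` is a hypothetical helper name.

```shell
# copy_without_query DIR: for every file under DIR whose name contains "?",
# copy it to the same name with the "?..." part removed, e.g.
#   fontawesome-webfont.woff2?v=4.3.0 -> fontawesome-webfont.woff2
# Existing files are never overwritten.
copy_without_query() {
  find "$1" -type f -name '*\?*' -exec sh -c '
    for f in "$@"; do
      dir=${f%/*}                  # directory part of the path
      base=${f##*/}                # file name, including the query string
      target="$dir/${base%%\?*}"   # drop everything from the first ? on
      if [ ! -e "$target" ]; then
        cp "$f" "$target"
      fi
    done
  ' sh {} +
}
```

Usage: `copy_without_query myproject.com`. Because existing files are skipped, a page such as index.html is left alone even if wget also saved a parameterized variant of it.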

Serve it up

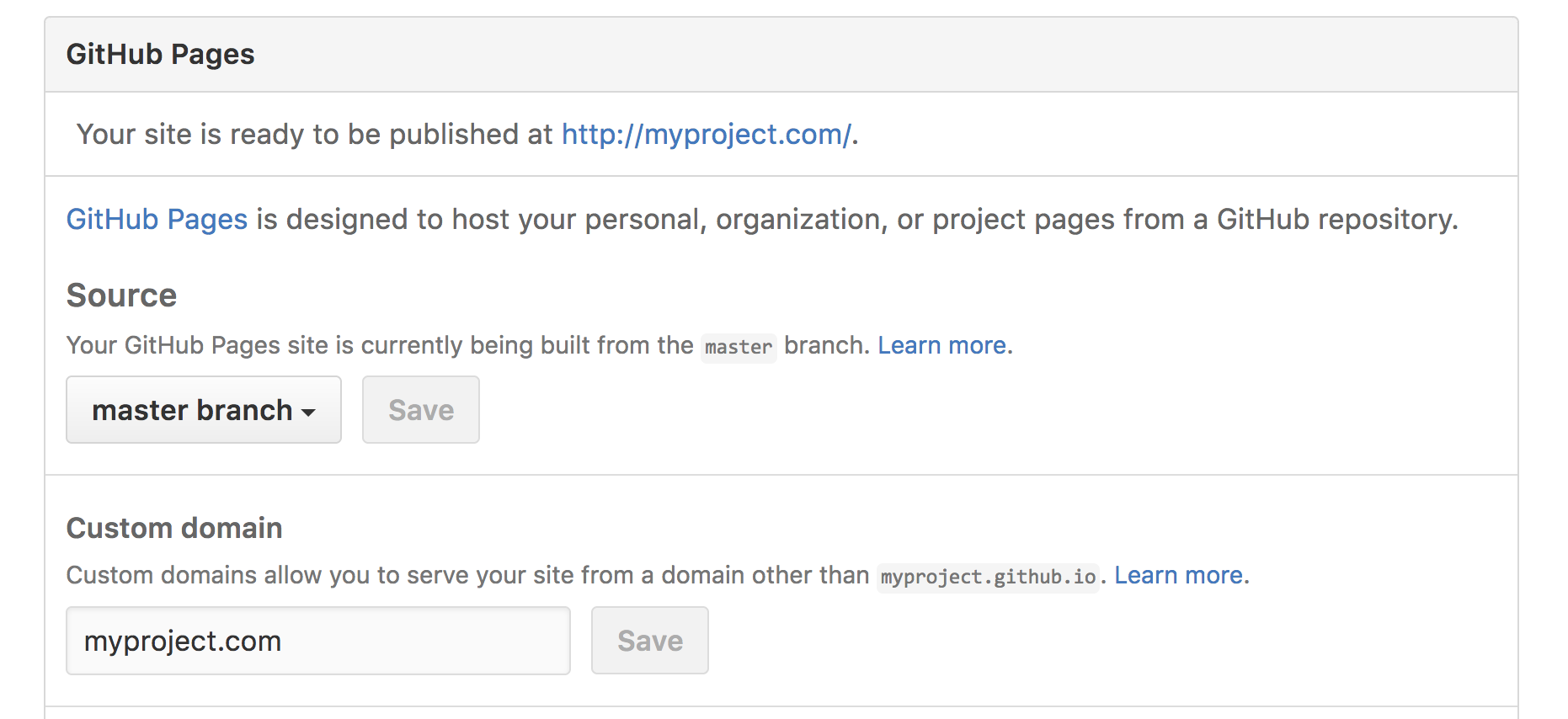

We’ll serve the static site through GitHub Pages. Create a new GitHub repository named myproject and upload the entire downloaded directory to its master branch. Then open the repository’s Settings and change the custom domain to your own domain (myproject.com).
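If you prefer the command line to uploading through the web interface, the preparation can be sketched with git. This assumes git is installed and that you substitute your own GitHub account for USERNAME. It also writes a CNAME file containing the custom domain at the repository root, which is where GitHub Pages reads the custom domain from for branch-published sites. `prepare_pages_repo` is a hypothetical helper name, and it deliberately stops short of pushing.

```shell
# prepare_pages_repo DIR DOMAIN: turn the mirrored DIR into a git repository
# on a master branch, add a CNAME file naming DOMAIN, and commit everything.
# Pushing is left to you.
prepare_pages_repo() {
  (
    cd "$1" || exit 1
    git init -q .
    git symbolic-ref HEAD refs/heads/master   # publish from master, as in this post
    printf '%s\n' "$2" > CNAME                # custom domain for GitHub Pages
    git add -A
    git commit -q -m "Static copy of $2"
  )
}
```

Usage: `prepare_pages_repo myproject.com myproject.com`, then inside that directory run `git remote add origin git@github.com:USERNAME/myproject.git` and `git push -u origin master`.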

Test GitHub pages custom domain

Once the custom domain is set, any request to the GitHub Pages servers with the correct host name returns your static site. This means you can check that the site is being served correctly before switching over your DNS settings.

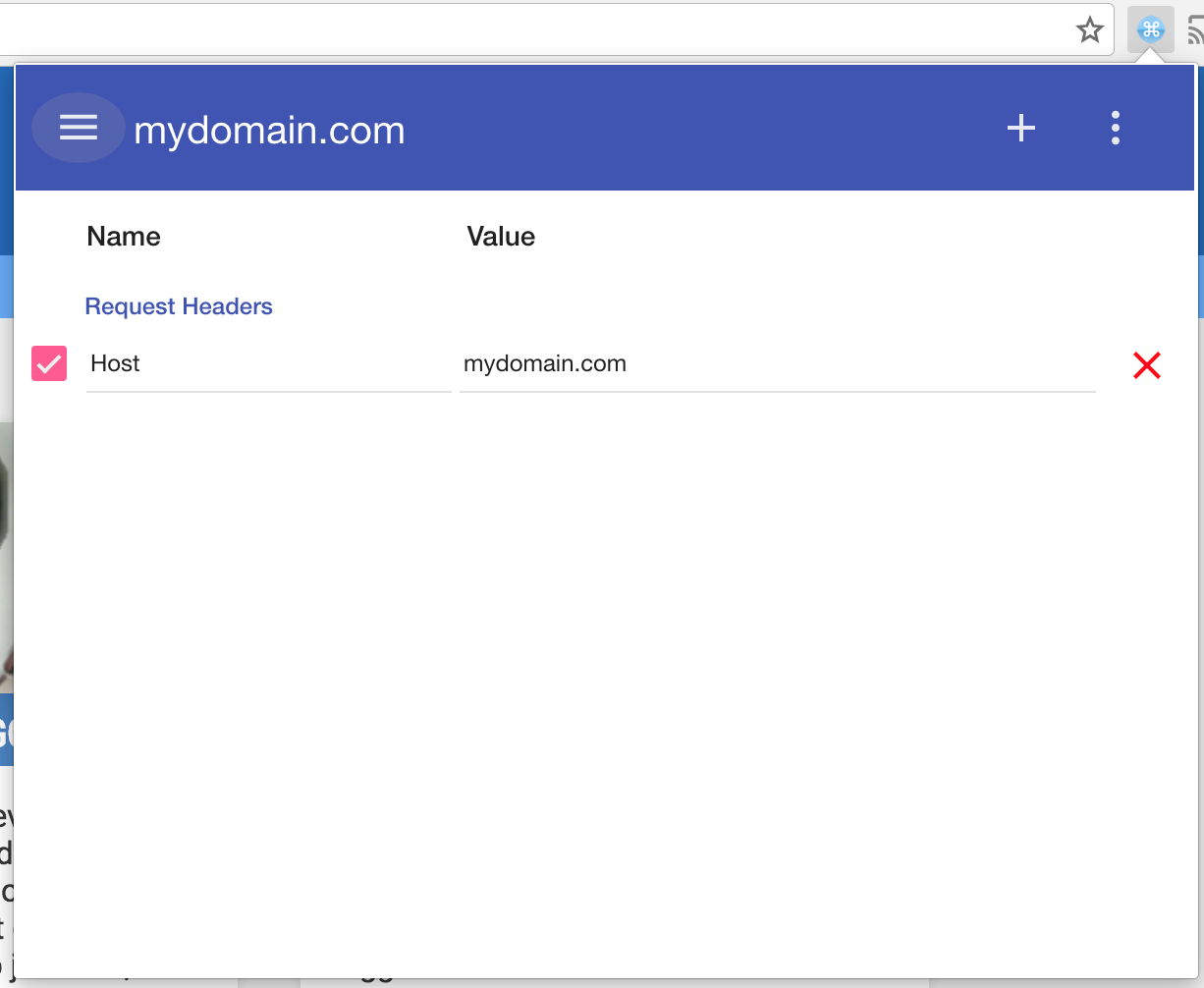

The trick is to browse to the GitHub Pages site while a browser extension overrides the Host header of your HTTP requests. I use a Chrome extension called ModHeader and set the Host header to myproject.com.

With the Host header set, navigate to https://myproject.github.io/.

You should see your static site being served up just like it did locally.

GitHub pages DNS switch

To serve your site through GitHub Pages, change the A record(s) in your DNS settings to:

- 192.30.252.153

- 192.30.252.154

These were GitHub’s addresses at the time of writing; see the GitHub help article on custom domains for the current ones.

Now wait patiently for your DNS changes to propagate, after which you can take your old servers offline. And don’t forget to enjoy not having to maintain those old scrappy servers anymore.
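To see whether the change has propagated, you can compare the addresses your resolver returns with the ones you configured. A minimal sketch, assuming the dig tool is available; `check_pages_ips` is a hypothetical helper that reads resolved addresses on standard input and succeeds only if at least one was returned and every one is in the expected list passed as arguments. Pass whichever addresses GitHub currently documents.

```shell
# check_pages_ips EXPECTED_IP...: read IPv4 addresses (one per line) from
# stdin; succeed only if at least one was read and all are in the expected list.
check_pages_ips() {
  found=0
  while IFS= read -r ip; do
    if [ -z "$ip" ]; then
      continue
    fi
    ok=1
    for want in "$@"; do
      if [ "$ip" = "$want" ]; then
        ok=0
      fi
    done
    if [ "$ok" -ne 0 ]; then
      echo "unexpected address: $ip" >&2
      return 1
    fi
    found=1
  done
  [ "$found" -eq 1 ]
}
```

Usage: `dig +short myproject.com A | check_pages_ips 192.30.252.153 192.30.252.154`. Resolvers cache old records, so results can differ between networks until the old TTL expires.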

Update 1 Nov 2017

Added the wget exclude directories option and the solution for GET parameterized resources.